The “intention economy” is drawing ethical concern: as AI technology advances, it is becoming possible to manipulate people in real time at a pre-reflective level, before they have consciously considered a choice. According to scholars at the University of Cambridge, this could have significant implications for our digital environment, turning human intentions into an asset that can be traded for profit.

According to the researchers, AI technology is quickly approaching the point where it can predict user choices at an early stage. That information could then be sold in real time to companies ready to meet these emerging needs, even before the user is conscious of them. This marks a shift from the much-discussed “attention economy”, in which companies compete to capture users’ attention through technologies such as social media, to a new and potentially more worrying market.

The Rise of the Intention Economy

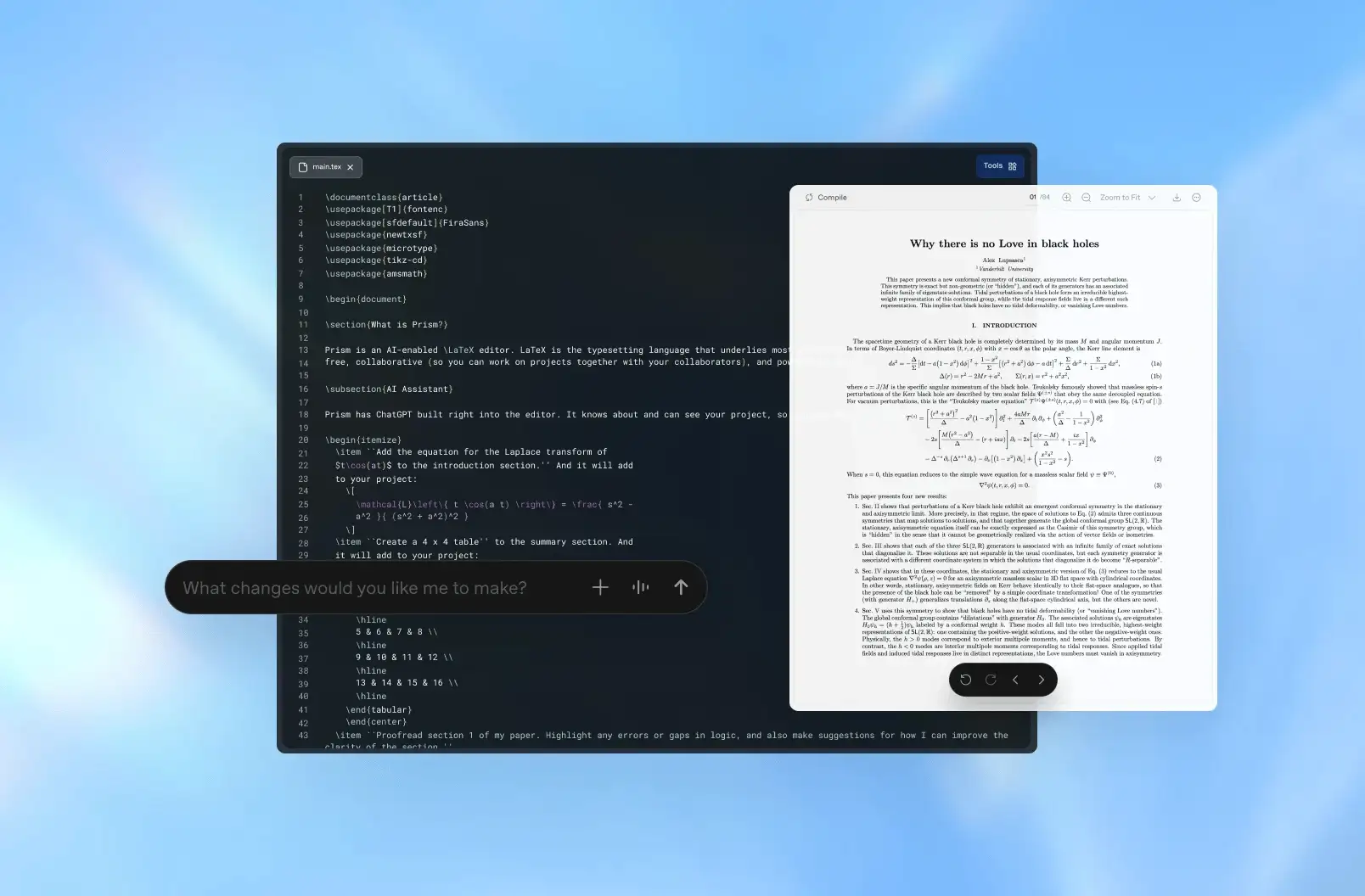

AI-based tools such as chatbots and virtual assistants are designed to mimic human personalities and gain users’ trust. They do so by collecting large amounts of user data from conversations and activity, which lets them tailor how they communicate in an effort to change individuals’ behavior. As some experts have noted, such capabilities create a risk of organized influence over the public on a large scale.

Dr. Yaqub Chaudhary, a scholar at the Leverhulme Centre for the Future of Intelligence at the University of Cambridge, highlighted the profound reach of AI technology. He noted that these tools make it possible to observe, infer and even gain a degree of control over human intentions through dialogue and subtle cues. This differs from conventional ways of gathering information and raises ethical issues of its own.

The intention economy will also have economic implications. The large language models (LLMs) behind chatbots can analyze collected user data to identify a user’s tone, word choice, political views and communication patterns. Such data can then be used to maximize commercial outcomes through tailored product recommendations, or to shift political allegiances. For instance, a bot might suggest buying a movie ticket as a way to relax, or strategically steer a conversation to favor particular platforms, advertisers and the like.

Dr. Jonnie Penn, a historian of technology at the University of Cambridge, argues that this emerging market carries social risks. He notes that, if it is not well regulated, it could seriously distort core democratic values and fair competition in the market. He also believes that turning people’s motivations into a new kind of currency could hamper their efforts to lead more fulfilling and productive lives and to pursue their dreams and ambitions.

The researchers also pointed to signs that the tech industry is already preparing for such a shift. OpenAI, Apple, Meta, Nvidia and other businesses have positioned themselves to use AI for predicting and directing intentions. For instance, Apple’s 2024 “App Intents” framework anticipates a user’s actions and suggests relevant apps. Meta’s ongoing work on intent recognition and Nvidia’s statements about the need to interpret desires are further examples of this direction.

Although these advances could bring benefits such as better user experiences and automated decision-making, the threats are considerable. Penn stressed that those eager to expand these technologies need to think critically about their implications, since society has already been caught off guard by consequences that appeared without warning. Back in 2009, Fernandez explained that the intention economy is the attention economy “plotted in time”, drawing attention to how it involves recording and selling users’ intentions.

The researchers recommend putting regulations in place before human intentions are turned into a commercial commodity, in order to reduce the possible risks. They believe that establishing ethical principles for the technology’s use and improving public understanding of it could help ensure that its development is not used to exploit society.

As artificial intelligence continues to develop, it remains important for researchers to keep human freedom in focus while the world, societies and individuals become ever more tightly bound to these innovations. Some have argued that the only way to prevent these tools from being used exploitatively is to educate the public and put light-touch laws in place.
