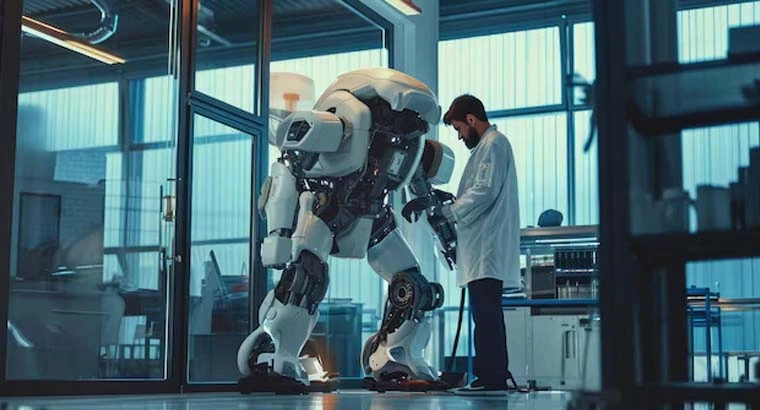

Mentee Robotics recently reached a milestone: its robot completed an end-to-end cycle of complex task execution, from receiving a verbal command to navigating through its environment. The cycle also covers scene understanding, object detection and localization, grasping, and natural language understanding. The result combines several leading-edge technologies: locomotion, scene understanding, object grasping, and natural language processing.

Mentee Robotics presented the prototype, with a production-ready version slated for deployment in Q1 2025. It will rely on camera-only sensing and proprietary electric motors, which the company says deliver unprecedented dexterity, along with fully integrated AI. That AI integration enables complex reasoning, task completion, and conversation, as well as on-the-fly learning of new tasks, and hence greater robotic autonomy.

Mentee Robotics was founded two years ago by Prof. Amnon Shashua (AI), Prof. Lior Wolf (computer vision), and Prof. Shai Shalev-Shwartz (ML), and has since worked in stealth to develop a general-purpose humanoid for household and industrial use.

The Menteebot prototype builds AI into every operational layer, adopting state-of-the-art technologies and novel methods. Its locomotion is based on a simulation-to-reality (Sim2Real) machine learning approach: reinforcement learning takes place on a simulated version of the robot, where effectively unlimited training data is available. The learned behaviors are then transferred to the real world, where only minimal data is needed.
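The train-in-simulation, transfer-to-reality idea can be illustrated with a toy sketch. Everything here is an assumption made for illustration and not Mentee Robotics' method: the "robot" is a 1-D point mass, the "policy" is a pair of controller gains, plain random search stands in for a reinforcement learning algorithm, and the simulated physics are randomized on each trial (a common Sim2Real technique known as domain randomization). The names `rollout`, `train_in_simulation`, and `REAL_ROBOT` are hypothetical.

```python
import random


def rollout(kp, kd, mass, friction, steps=200, dt=0.05):
    """Run one episode and return the summed squared distance from the target at 0."""
    x, v = 1.0, 0.0  # start one unit away from the target, at rest
    cost = 0.0
    for _ in range(steps):
        force = -kp * x - kd * v  # the "policy": a simple PD controller
        v += (force / mass - friction * v) * dt
        x += v * dt
        cost += x * x
    return cost


def train_in_simulation(rng, candidates=200, sims_per_candidate=8):
    """Random search over gains; each candidate is scored on randomized simulated physics."""
    best_gains, best_cost = (0.0, 0.0), float("inf")
    for _ in range(candidates):
        gains = (rng.uniform(0.0, 20.0), rng.uniform(0.0, 10.0))
        total = 0.0
        for _ in range(sims_per_candidate):
            # Simulation is cheap, so physics can be resampled as often as needed.
            mass = rng.uniform(0.5, 2.0)
            friction = rng.uniform(0.0, 0.5)
            total += rollout(*gains, mass, friction)
        average = total / sims_per_candidate
        if average < best_cost:
            best_gains, best_cost = gains, average
    return best_gains


# Hypothetical "real" robot: parameters the training loop never sees exactly.
REAL_ROBOT = {"mass": 1.3, "friction": 0.2}

rng = random.Random(0)  # fixed seed so the run is repeatable
gains = train_in_simulation(rng)
real_cost = rollout(*gains, **REAL_ROBOT)
untrained_cost = rollout(0.0, 0.0, **REAL_ROBOT)
print(f"trained gains: {gains}, real cost: {real_cost:.2f}, untrained: {untrained_cost:.2f}")
```

Because the gains are chosen to work across a range of simulated masses and friction values, they should carry over to the "real" parameters without further tuning, which is the property the Sim2Real approach aims for.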

NeRF-based algorithms enable real-time mapping of the environment, representing scenes with the latest neural 3D techniques. These cognitive maps store semantic information, so the robot can query them for specific locations or items. The robot can then localize itself within the 3D map and automatically plan dynamic paths that avoid obstacles.
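The query-then-plan flow described above can be sketched on a much simpler map. This is a minimal illustration under stated assumptions: a small 2-D grid stands in for the 3-D neural map, a dictionary stands in for its semantic layer, and breadth-first search stands in for the planner. The grid, the labels, and the functions `locate` and `plan_path` are hypothetical and do not describe the robot's actual software.

```python
from collections import deque

# Hypothetical toy map: '#' is an obstacle, '.' is free space.
GRID = [
    "..#..",
    "..#..",
    ".....",
]

# Hypothetical semantic layer: item name -> (row, col) cell in the map.
LABELS = {"mug": (0, 4), "charger": (2, 0)}


def locate(labels, name):
    """Semantic query: return the map cell stored for a named item, or None."""
    return labels.get(name)


def plan_path(grid, start, goal):
    """Breadth-first search for a shortest obstacle-free path; returns a list of cells or None."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None


robot_cell = (0, 0)
path = plan_path(GRID, robot_cell, locate(LABELS, "mug"))

# A new obstacle appears in the only gap; replanning reports that no route exists.
blocked = GRID[:2] + ["..#.."]
replanned = plan_path(blocked, robot_cell, locate(LABELS, "mug"))
```

Replanning whenever the map changes is the simplest way to handle moving obstacles. A real system would more likely use an incremental planner than rerun the search from scratch.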

Large language models (LLMs), which are generally transformer-based, play a central role in interpreting commands and “thinking through” the steps required to complete a given task. The robot's locomotion and dexterity must also be tightly integrated so that it can balance itself while lifting objects or extending its hands.

At this initial stage, a complete end-to-end cycle has been demonstrated, beginning with a verbal command and proceeding through navigation, locomotion, scene understanding, object detection and localization, and natural language understanding in complex tasks. This preview version is not intended for deployment.

Prof. Amnon Shashua, CEO of Mentee Robotics, expressed his enthusiasm about the convergence of diverse technologies that contributed to this accomplishment. He said that computer vision, natural language understanding, and highly detailed simulators are coming together with the methods needed to carry simulated behavior over to the physical world.

Shashua further stated that Mentee Robotics sees this convergence as the foundation for future general-purpose bipedal robots that can go anywhere a human can, with the intelligence to perform housework and to learn, by imitation, tasks they were not previously trained for.

Mentee Robotics has reached a significant milestone in robotics and AI integration. The company has built a robot that can carry out complex tasks on its own in real-world settings by combining modern technologies such as computer vision, natural language processing, simulation-based training, and reinforcement learning.

This achievement has far-reaching implications, paving the way for a new era of automation and robotic assistance in both the home and industry. Further technical breakthroughs will likely deepen the integration of AI and robotics, allowing robots to perform complex tasks more efficiently, adaptably, and autonomously.